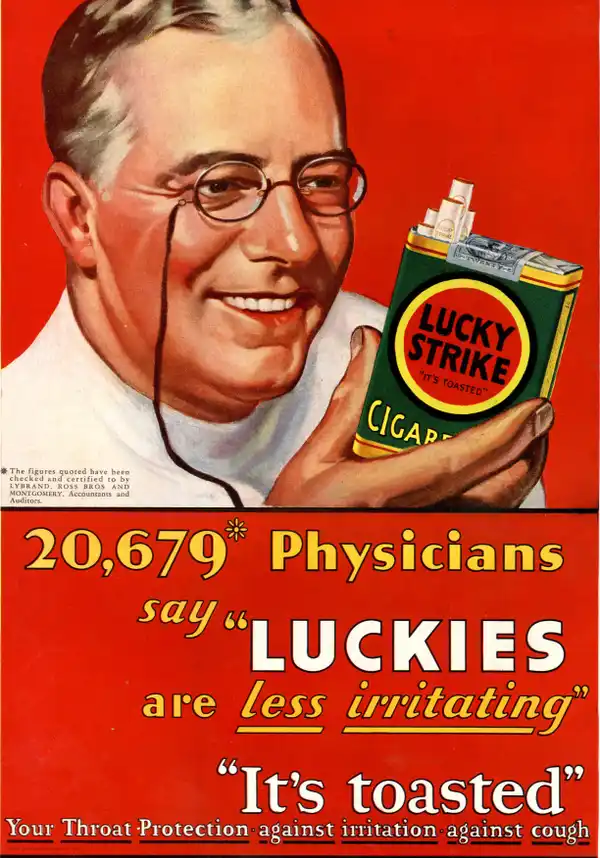

One of the things that annoys me most about the test publishing industry, especially ACT and College Board, is how much it behaves like Big Tobacco. And not just modern Big Tobacco, but Big Tobacco in the halcyon days of the industry, when it had free rein to make mildly supported, hyperbolic claims about the benefits of its products.

And while the hyperbolic marketing of tests isn't new, in recent years test publishers seem increasingly willing to make claims that are only mildly supported by things that barely qualify as research. The latest example comes from ACT, which has published a new survey of students' opinions on GPA (one that strongly implies grade inflation). Before I send you to look at my notes on the survey, let me set the stage.

If I told you I was going to send a survey to 79,221 of the 1.3 million ACT test takers in 2023 and only 1,800 of them chose to reply (a response rate of about 2.3%), what do you think that would say about the respondents? What if the survey asked these test takers about "nonacademic" factors and their perceptions of GPA? What would you expect them to say? How representative of the general student population would you expect them to be?

What if I told you that 21% of the group that replied was Asian (for context, only 4% of ACT test takers are Asian)? What if the surveyed group was 65% female? What if 58% came from families in the top 15% of the income distribution (only 21% of all ACT test takers reported family income above $100k)? Would it change your view of the survey's usefulness if you were told that the respondents were disproportionately wealthy, Asian, female, and the children of parents with advanced degrees? Do you think that might skew the results? If I had a sample like that, my methodology would be: STOP. Do not pass Go. Do not collect $200.
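For anyone who wants to check the math, here is a quick back-of-the-envelope calculation using only the figures quoted above. It computes the response rate and how over-represented Asian students were among respondents relative to all ACT test takers. It is just arithmetic on the reported numbers, not a re-analysis of ACT's data.

```python
# Back-of-the-envelope check using only the figures quoted in this post.
test_takers = 1_300_000   # ACT test takers in 2023
invited = 79_221          # students who were sent the survey
respondents = 1_800       # students who replied

response_rate = respondents / invited
print(f"Response rate: {response_rate:.1%}")                  # prints "Response rate: 2.3%"
print(f"Share of test takers invited: {invited / test_takers:.1%}")

# Share of respondents who were Asian vs. share of all ACT test takers
asian_share_respondents = 0.21
asian_share_test_takers = 0.04
overrepresentation = asian_share_respondents / asian_share_test_takers
print(f"Asian students over-represented by {overrepresentation:.2f}x")  # prints "... by 5.25x"
```

In other words, roughly one in 44 invited students answered, and Asian students showed up in the sample at more than five times their share of the test-taking population.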

Why would you create a report that is based on a population that isn’t representative of the group you want to report on? Unless . . . and stay with me here . . . unless the report confirmed something you wanted to sell.

But let’s set all of that aside and consider a few things:

GPA is a terrible thing to survey students about

When was the last time you thought your performance review was 100% accurate? Did you ever think it was too high? Of course not; we all think any rating we receive is too low. Most students think that if they didn't get an A, the grade isn't a good measure of their abilities. This report could have been an email.

GPA is a terrible thing to report on with regard to college admission

A good number of colleges recalculate GPA, so the GPA a student knows could be very different from the GPA used by the colleges they are applying to. I can't say what percentage of colleges recalculate GPA, or how, since neither College Board nor ACT has published any research on this (though they publish a lot railing against the specter of grade inflation). But one admissions officer told me that the five colleges she had worked at each recalculated it differently. For example, the UCs factor in only grades from 10th and 11th grade in certain courses, and they do it differently for in-state and out-of-state students; UIUC looks only at 10th and 11th grade, only in five core academic subjects, and doesn't weight AP or IB courses (though it does use them in a "rigor" calculation); and UGA and UM use 9th through 11th grades in their recalculations. So which GPA is the one for a student to talk about?
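To make the point concrete, here is a small sketch of how one transcript can produce several different GPAs. The transcript, the course list, the 4-point scale, the +1 bump for AP/IB, and the three rule sets are all hypothetical, loosely inspired by the kinds of rules described above; none of them is any college's actual formula.

```python
from dataclasses import dataclass

# Hypothetical 4-point scale and "core" subject list, for illustration only.
POINTS = {"A": 4.0, "B": 3.0, "C": 2.0, "D": 1.0, "F": 0.0}
CORE = {"english", "math", "science", "social_studies", "language"}

@dataclass
class Course:
    name: str
    grade_level: int        # 9 through 12
    subject: str
    letter: str             # letter grade earned
    advanced: bool = False  # AP/IB course

def gpa(courses, grades, core_only=False, weight_advanced=False):
    """Average grade points over the courses a (hypothetical) rule set keeps."""
    selected = [c for c in courses
                if c.grade_level in grades and (not core_only or c.subject in CORE)]
    if not selected:
        return 0.0
    total = 0.0
    for c in selected:
        points = POINTS[c.letter]
        if weight_advanced and c.advanced:
            points += 1.0  # hypothetical +1 bump for AP/IB courses
        total += points
    return total / len(selected)

# One hypothetical student's transcript.
transcript = [
    Course("Algebra I", 9, "math", "C"),
    Course("Art I", 9, "art", "A"),
    Course("English 10", 10, "english", "B"),
    Course("AP World History", 10, "social_studies", "B", advanced=True),
    Course("Chemistry", 11, "science", "A"),
    Course("AP Calculus", 11, "math", "B", advanced=True),
    Course("Band", 11, "music", "A"),
]

# Three hypothetical recalculation rules applied to the same transcript.
scenarios = {
    "All courses, grades 9-11, weighted": dict(grades=range(9, 12), weight_advanced=True),
    "Core only, grades 10-11, unweighted": dict(grades=range(10, 12), core_only=True),
    "All courses, grades 9-11, unweighted": dict(grades=range(9, 12)),
}
for label, rules in scenarios.items():
    print(f"{label}: {gpa(transcript, **rules):.2f}")
# All courses, grades 9-11, weighted: 3.57
# Core only, grades 10-11, unweighted: 3.25
# All courses, grades 9-11, unweighted: 3.29
```

Same student, same grades, three different numbers, and that's before anyone asks the student how they *feel* about their GPA.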

Perception isn’t reality

Strangely, the report covers student perception without doing any research on what the reality actually is, which makes it look more like fear-mongering than research. Is the typical student perception right? Are students wrong? Why do we care what students believe if we're not going to do the work to dispel any myths or confirm that the belief is valid?

Anyway, here is the latest of the 66 reports on grade inflation that ACT, a 65-year-old company, has on its website.

Feel free to tell me if I missed anything interesting in the report.